Artificial Intelligence (AI) has rapidly worked its way into many aspects of our lives, from personalized recommendations to self-driving cars. However, its potential for good comes with a dark side: its growing role in cyberattacks. As AI technology becomes more sophisticated, so does its potential to be weaponized by malicious actors.

One of the most significant ways AI is being used in cyberattacks is through automated phishing campaigns. Traditional phishing attacks often rely on manual effort to craft convincing emails and target specific individuals. With AI, attackers can generate large volumes of personalized phishing emails in a fraction of the time. AI algorithms can analyze large datasets of personal information to identify vulnerabilities and tailor each message to maximize its effectiveness.

Moreover, AI can create deepfakes: highly realistic synthetic media designed to deceive individuals and organizations. For example, a deepfake video of a CEO or other high-profile individual makes it easier for attackers to trick employees into divulging sensitive information.

AI is also being employed to automate the process of discovering and exploiting vulnerabilities in computer systems. AI-powered tools can quickly scan networks for weaknesses and identify potential targets. Once a vulnerability is discovered, AI can be used to develop and launch automated attacks, overwhelming defenses and maximizing the damage caused.

Tools like FraudGPT could be used to create a seemingly legitimate email from a trusted source, carrying a malicious attachment disguised as a harmless document. When a user opens the attachment, the malware executes, giving the attacker unauthorized access to the user's system. Similarly, WormGPT could be used to generate realistic-looking code for a new type of malware, making that malware more difficult for security software to detect and block.

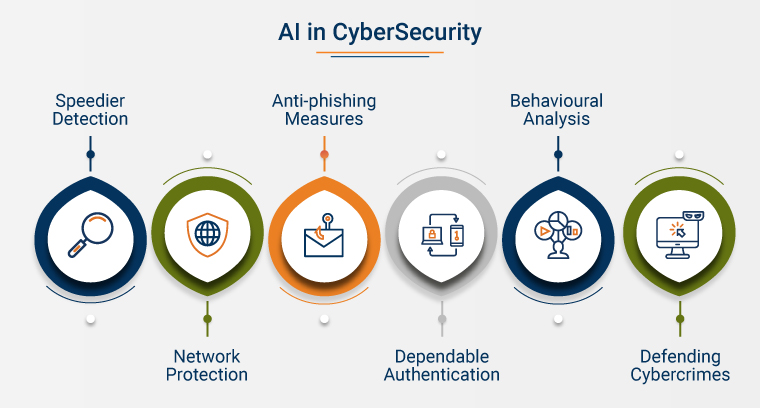

The ability of AI to generate highly convincing and realistic content poses a significant challenge for cybersecurity professionals. Traditional security measures, such as signature-based antivirus software, may struggle to detect and block AI-generated threats. As a result, organizations must invest in more advanced security solutions that can detect and mitigate threats based on behavior and context, rather than relying solely on signatures.
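To make the contrast between signatures and behavior concrete, here is a minimal Python sketch. Everything in it is an illustrative assumption rather than how any particular product works: the payload bytes, the event names, the weights, and the threshold are all hypothetical. It shows why a trivially altered payload slips past an exact-hash signature yet can still be flagged by what it does.

```python
import hashlib
from typing import Iterable

# Hypothetical signature database: SHA-256 digests of known-bad payloads.
KNOWN_BAD_HASHES = {hashlib.sha256(b"malicious-payload-v1").hexdigest()}

# Hypothetical behavior weights. Real tools derive these from telemetry.
BEHAVIOR_WEIGHTS = {
    "opens_office_document": 0,
    "spawns_shell": 3,
    "modifies_startup_entry": 3,
    "contacts_unseen_domain": 2,
    "reads_browser_credentials": 4,
}
ALERT_THRESHOLD = 5  # assumed cut-off for raising an alert


def signature_match(payload: bytes) -> bool:
    """Signature check: exact match against known-bad digests."""
    return hashlib.sha256(payload).hexdigest() in KNOWN_BAD_HASHES


def behavior_score(events: Iterable[str]) -> int:
    """Behavior check: sum the weights of observed events (unknown events score 0)."""
    return sum(BEHAVIOR_WEIGHTS.get(event, 0) for event in events)


def is_suspicious(payload: bytes, events: Iterable[str]) -> bool:
    """Flag a sample if either the signature or the behavior check fires."""
    return signature_match(payload) or behavior_score(events) >= ALERT_THRESHOLD


# A regenerated variant: one byte differs, so the known hash no longer matches.
variant = b"malicious-payload-v2"
observed = ["opens_office_document", "spawns_shell", "contacts_unseen_domain"]

print(signature_match(variant))          # False: the signature alone misses it
print(is_suspicious(variant, observed))  # True: behavior score 5 reaches the threshold
```

The weighted sum is deliberately simplistic. The point is only that behavior-based checks key on actions that an attacker cannot change as cheaply as the bytes of a file.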

Some steps we can take now to guard against AI-related cybercrime:

- Validate AI-generated code before use

- Stay on top of the latest AI trends and adjust your security strategy accordingly

- Leverage AI to strengthen your security

- Use AI tools responsibly

- Be wary of suspicious voice or video messages

- Educate your employees on the security threats that AI can pose
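As one illustration of the first item, validating AI-generated code before use, the sketch below runs a basic static check on a Python snippet without executing it. It uses the standard-library `ast` module; the function name `review_generated_code` and the lists of "risky" calls and modules are assumptions chosen for the example, not an established standard. A check like this is only a first filter and does not replace human review or sandboxed testing.

```python
import ast
from typing import List

# Assumed deny-lists for illustration; tune these to your own policy.
RISKY_CALLS = {"eval", "exec", "compile", "__import__"}
RISKY_MODULES = {"subprocess", "socket", "ctypes"}


def review_generated_code(source: str) -> List[str]:
    """Return human-readable findings for a snippet, without running it."""
    try:
        tree = ast.parse(source)
    except SyntaxError as exc:
        return [f"line {exc.lineno}: does not parse ({exc.msg})"]

    findings = []  # (line number, message) pairs, sorted before returning
    for node in ast.walk(tree):
        if isinstance(node, ast.Call) and isinstance(node.func, ast.Name):
            # Direct calls such as eval(...) or exec(...)
            if node.func.id in RISKY_CALLS:
                findings.append((node.lineno, f"call to {node.func.id}()"))
        elif isinstance(node, ast.Import):
            for alias in node.names:
                if alias.name.split(".")[0] in RISKY_MODULES:
                    findings.append((node.lineno, f"imports {alias.name}"))
        elif isinstance(node, ast.ImportFrom):
            if node.module and node.module.split(".")[0] in RISKY_MODULES:
                findings.append((node.lineno, f"imports from {node.module}"))

    return [f"line {lineno}: {message}" for lineno, message in sorted(findings)]


# Hypothetical snippet standing in for code returned by an AI assistant.
snippet = "import subprocess\nresult = eval(user_input)\n"
report = review_generated_code(snippet)
for finding in report:
    print(finding)
# line 1: imports subprocess
# line 2: call to eval()
```

An empty report means only that none of the listed patterns appeared, not that the code is safe.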

In conclusion, AI has the potential to revolutionize the world for the better. However, it is also a powerful tool that can be misused by malicious actors. As AI technology continues to evolve, it is imperative that we develop strategies to mitigate the risks associated with its use in cybercrime. By understanding the ways in which AI is being weaponized and taking proactive steps to protect ourselves, we can help ensure a safer and more secure digital future.